System administrators think we live in a world governed by Newtonian physics where, as Master Yoda would say, "a bit either a one or a zero is." The truth is that today’s datacenter is becoming more and more a quantum reality where there are no certainties, just probabilities. Any time we write data to some medium or another, there’s some probability that we won’t be able to read that data back.

I came to this realization as one of my fellow delegates at Storage Field Day 5 asked the speaker describing EMC’s XtremIO about how its deduplication scheme handled hash collisions. Having argued this point to what I thought was a logical conclusion when deduplication first arrived in the backup market four years ago, my first reaction was to haul out the math like I did in the now-seminal blog post "When Hashes Collide."

I then realized what the real problem was: people weren’t weighing the probability that their data might get corrupted; they were worried about the mere possibility of corruption, as if that possibility didn’t already exist with their current solutions. We have to stop thinking of data the way physicists thought about the universe before quantum mechanics raised its complex head. Newton, through his marvelous gravity equation, could calculate the orbits of the planets and predict eclipses. However, when we get to the quantum scale, things get a lot more complicated.

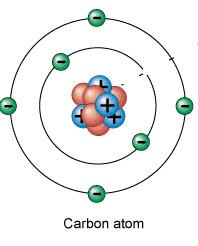

Those of you who remember any of your high school or college physics think of an atom as looking like a miniature solar system with electrons in circular orbits with discrete locations, like the planets.

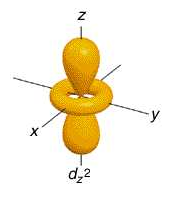

Quantum mechanics, however, tells us that we can never know exactly where any given subatomic particle is at any given time. At best, the Schrödinger equation lets us create a map of the probabilities of an electron being in specific places. At the quantum level, basically all we know are probabilities -- a fact that so offended Albert Einstein that despite being an agnostic, he famously remarked, “God does not play dice with the universe.”

Electron probability maps look more like this:

Or more accurately, like this:

Well folks, all sorts of things -- from external electromagnetic waves to sympathetic vibrations from adjacent disk drives -- cause bits to randomly change in our storage media and as they're crossing our networks.

A near-line disk drive has a specified error rate of 1 in 10^15 bits read, which means that about once in every 10^15 bits it reads, it will either fail or return the wrong data in an undetected error. A 6TB drive holds about 4.8x10^13 bits, so that’s roughly a 5% probability of an unrecoverable error for each full read or write of the drive. Now 5% is a long way from certain data loss, but it’s big enough that networks and storage systems use checksums and other hash functions to detect errors and allow them to re-read, or recalculate, data from the other drives in the RAID set.
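For anyone who wants to check the drive arithmetic, here is a minimal Python sketch. The function name `p_unrecoverable` and its parameters are my own for illustration, and it assumes errors are independent from one bit to the next, which real drives don't promise. The answer is sensitive to the unit the error rate is quoted in: per bit read, a full pass over a 6TB drive comes out at roughly 4.7%; per byte read, it would be roughly 0.6%.

```python
import math

def p_unrecoverable(capacity_bytes: float, rate: float, unit_bits: int) -> float:
    """Probability of at least one error while reading capacity_bytes once.

    rate is the error probability per unit read; unit_bits is 1 if the
    rate is quoted per bit, 8 if it is quoted per byte.
    Assumes independent errors (a simplification).
    """
    units_read = capacity_bytes * 8 / unit_bits
    # 1 - (1 - rate) ** units_read, computed stably for very small rates
    return -math.expm1(units_read * math.log1p(-rate))

DRIVE_BYTES = 6e12   # 6TB drive
ERROR_RATE = 1e-15   # 1 error in 10^15 units read

per_bit = p_unrecoverable(DRIVE_BYTES, ERROR_RATE, unit_bits=1)
per_byte = p_unrecoverable(DRIVE_BYTES, ERROR_RATE, unit_bits=8)

print(f"rate quoted per bit:  {per_bit:.2%}")
print(f"rate quoted per byte: {per_byte:.2%}")
```

Either way, the number is far too large to ignore, which is the point: we already live with this risk every day and manage it with checksums and RAID.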

As I calculated in "When Hashes Collide," the odds of a hash collision in a 4PB dataset using 8KB blocks are 1 in 4.5x10^26. Since the MTBF (mean time between failures) of an enterprise hard drive is about 2 million hours, the odds of both drives in a RAID 1 set failing within the same hour are 1 in 2x10^6 squared, or 1 in 4x10^12, which is about 10^14 (100 trillion for those in the US that need the translation) times more likely than data being corrupted due to a hash collision.

New technologies such as deduplication introduce new failure modes, like a hash collision. If you worry about the possibility of this new failure mode without taking the quantum mechanical view, weighing the probability of that failure against the risks you are already taking and consider reasonable, the new technology will look dangerous when it’s actually safer than what you’re already doing.