Last month I discussed how to choose "the best" storage, which always depends on defining your requirements. Reader ClassC made a comment about the proliferation of industry buzzwords, which makes it difficult to evaluate actual performance. How can you tell if a certain product or technology will meet your goals?

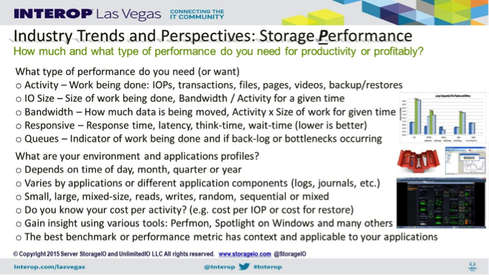

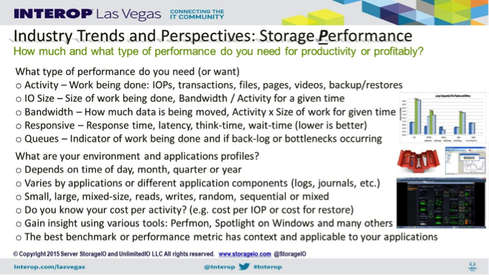

Often when I discuss mainstream applications with people, the perception is that bandwidth only applies to big data and analytics, video, and high-performance compute (HPC) or supercomputing applications such as those used in the seismic, geo, energy, video security surveillance, or entertainment industries. The reality is that those applications can be bandwidth or throughput intensive, but they can also require a large number of small I/Os that need many IOPs to handle metadata-related processing. Even bulk storage repositories for archiving, solutions using scale-out NAS, and object storage have a mix of IOPs and bandwidth.

Likewise, while traditional little data databases and file sharing have had smaller bandwidth needs due to their smaller I/O sizes, those I/Os are getting larger. Sure, you will still find 4K-sized I/Os, but those that are 16K, 32K, 64K and larger are becoming more common, particularly as more caching and buffering are done. Caching, buffering, read-ahead and write-behind can improve I/O performance closer to the application while pushing larger I/O demands further down the stack.
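To make the link between I/O size, IOPs and bandwidth concrete, here is a minimal sketch of the arithmetic: bandwidth is simply the I/O rate multiplied by the I/O size. The function names are my own for illustration, the 10,000 IOPs figure is a made-up workload, and I am assuming that "4K" means 4 KiB.

```python
def bandwidth_mib_per_s(iops: float, io_size_kib: float) -> float:
    """Bandwidth in MiB/s implied by an I/O rate and an I/O size in KiB."""
    return iops * io_size_kib / 1024


def iops_for_bandwidth(bandwidth_mib_s: float, io_size_kib: float) -> float:
    """I/O rate needed to sustain a given bandwidth at a given I/O size."""
    return bandwidth_mib_s * 1024 / io_size_kib


# Hypothetical workload: the same 10,000 IOPs at growing I/O sizes.
for size_kib in (4, 16, 64, 1024):
    mib_s = bandwidth_mib_per_s(10_000, size_kib)
    print(f"{size_kib:>5} KiB x 10,000 IOPs = {mib_s:,.1f} MiB/s")
```

The same activity rate that needs about 39 MiB/s at 4 KiB needs 625 MiB/s at 64 KiB, which is why larger I/Os turn an "IOPs workload" into a bandwidth one.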

Many applications also rely on more than just a database or file system for their data needs. For example, database imports or exports result in larger I/O operations. Archiving, backup/restore or other data protection operations, along with file sync and share, can also place more demand on applications. Not all environments and applications are the same. And while they may be interesting to talk about, not all storage technologies, old or new, are best for every scenario.

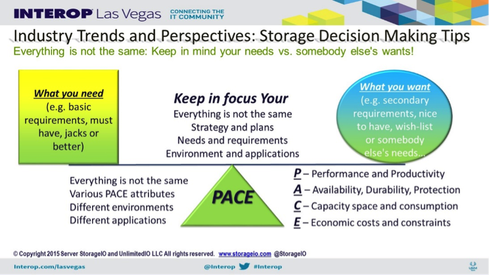

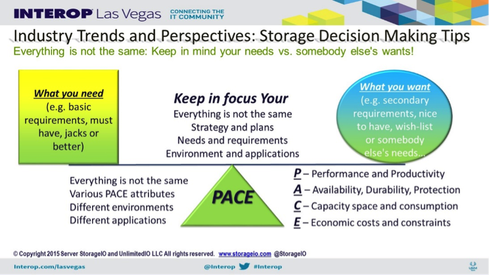

You must look at each application and its PACE requirements: Performance, Availability, Capacity and Economics. These will vary even for different parts of an application, system or environment. Every application has some PACE attributes you can measure. When it comes to performance, it’s not always about IOPs or activity, particularly with smaller I/O sizes for which bandwidth and latency are a concern.

If your environment and applications lean toward medium to smaller I/O (IOPs, transactions, gets, puts, reads, writes), then you shouldn't focus on storage solutions for streaming bandwidth. Likewise, if your application needs are generally for 128K, 1MB, 10MB or bigger I/Os that are reads or writes, you shouldn't focus on very small random reads or writes. The same thing applies to latency and other metrics.

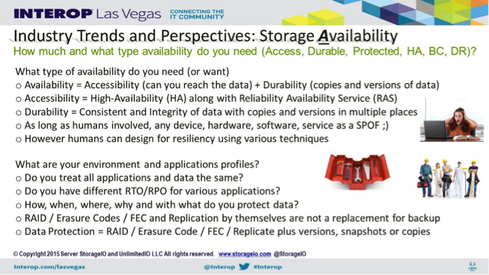

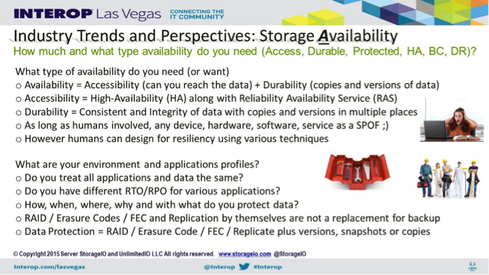

Availability also varies. It covers both access and durability, including protection copies (archives, backup/restore, clones, snapshots, versions, replication and mirroring), as well as security, business continuity and disaster recovery.

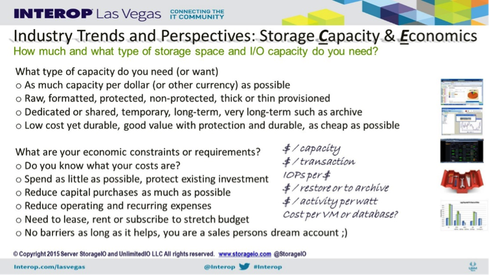

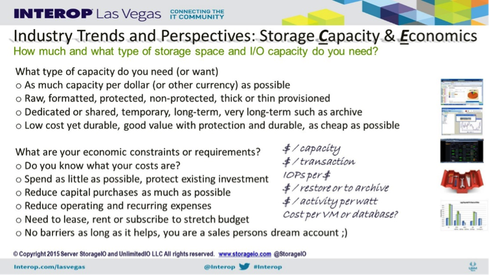

Capacity is another major criterion, both in terms of raw as well as formatted usable capacity, along with corresponding data footprint reduction (DFR) capacities. Common DFR techniques, technologies and capabilities include archiving, compression and consolidation, data management, dedupe and deletion, space-saving snapshots, thin provisioning, and different RAID levels including advanced parity derivatives such as erasure coding or forward error correction.
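As a rough illustration of how raw, usable and effective capacity differ, the sketch below applies a parity overhead and then a data footprint reduction ratio. It is a deliberately simplified model: the function names, the 8+2 layout and the 2:1 reduction ratio are assumptions for the example, and real systems also lose capacity to spares, metadata and formatting.

```python
def usable_capacity_tb(raw_tb: float, data_units: int, parity_units: int) -> float:
    """Usable capacity after parity overhead for a data+parity layout.

    An 8+2 erasure-coded or RAID 6 style layout keeps 8/10 of raw capacity.
    """
    return raw_tb * data_units / (data_units + parity_units)


def effective_capacity_tb(usable_tb: float, reduction_ratio: float) -> float:
    """Effective capacity if data reduces by reduction_ratio (2.0 means 2:1)."""
    return usable_tb * reduction_ratio


# Hypothetical system: 100 TB raw, 8+2 parity layout, 2:1 DFR ratio.
usable = usable_capacity_tb(100, data_units=8, parity_units=2)
effective = effective_capacity_tb(usable, reduction_ratio=2.0)
print(f"raw 100 TB -> usable {usable:.0f} TB -> effective {effective:.0f} TB")
```

The reduction ratio depends heavily on the data itself, so treat any vendor-quoted effective capacity as an estimate to verify against your own workloads.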

Economics are always a concern, but you must make more than just a simple cost-per-capacity calculation. If you have performance concerns, then how many IOPs, how much bandwidth or how low a response time can you get per dollar spent?
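One way to go beyond cost per capacity is to compute several per-dollar metrics side by side. The sketch below does that for two storage options; the `StorageOption` class and every number in it are hypothetical and only there to show the calculation.

```python
from dataclasses import dataclass


@dataclass
class StorageOption:
    """Hypothetical storage option; all figures are illustrative only."""
    name: str
    price_usd: float
    usable_tb: float
    iops: float
    bandwidth_mb_s: float

    def cost_per_tb(self) -> float:
        return self.price_usd / self.usable_tb

    def iops_per_dollar(self) -> float:
        return self.iops / self.price_usd

    def mb_s_per_dollar(self) -> float:
        return self.bandwidth_mb_s / self.price_usd


options = [
    StorageOption("capacity-optimized", 10_000, 100, 5_000, 1_000),
    StorageOption("performance-optimized", 10_000, 20, 200_000, 3_000),
]

for o in options:
    print(f"{o.name}: ${o.cost_per_tb():,.0f}/TB, "
          f"{o.iops_per_dollar():.1f} IOPs/$, {o.mb_s_per_dollar():.2f} MB/s per $")
```

With these made-up figures, the option that looks five times more expensive per terabyte delivers forty times the IOPs per dollar, which is the kind of trade-off a capacity-only comparison hides.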

Apply the same questions to availability: How much resiliency do you need for accessibility and to ensure durability? Also keep in mind the 4 3 2 1 rule. Keep at least 4 copies, with at least 3 versions (at different time intervals), with at least 2 of those copies on different storage systems, servers or media, and at least one of those off-site.
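The 4 3 2 1 rule is easy to turn into a quick check. The following is a minimal sketch under my own assumptions about how you would record each copy; the `Copy` fields and the sample inventory are hypothetical, not taken from any particular backup tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    version: str   # label for the point in time this copy captures
    system: str    # storage system, server or media holding the copy
    offsite: bool  # True if the copy is kept off-site


def meets_4321(copies: list[Copy]) -> bool:
    """True if the copies satisfy the 4 3 2 1 rule as described above."""
    return (
        len(copies) >= 4                              # at least 4 copies
        and len({c.version for c in copies}) >= 3     # at least 3 versions
        and len({c.system for c in copies}) >= 2      # on at least 2 systems
        and any(c.offsite for c in copies)            # at least 1 off-site
    )


# Hypothetical inventory of protection copies for one data set.
inventory = [
    Copy("monday", "primary-array", offsite=False),
    Copy("tuesday", "primary-array", offsite=False),
    Copy("wednesday", "backup-server", offsite=False),
    Copy("wednesday", "cloud-bucket", offsite=True),
]
print(meets_4321(inventory))
```

Dropping the off-site copy from this inventory fails the check on two counts: only three copies remain, and none of them is off-site.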

Remember that one size, approach or technique does not fit every environment or application. Understanding how different storage systems, solutions and software are optimized for various applications is also important. This requires moving beyond the block, file and object discussion to how those solutions are optimized for large or small I/O activity, reads or writes, and random or sequential activity.

While an object storage system can be good for addressing economics with durable capacity, it may not be good for databases, key value or other applications. Some solid-state solutions may be optimized for smaller I/O activity, others for bandwidth, and yet others for mixed workloads. Only by keeping your focus on PACE and balancing what you need with what you want will you arrive at the most appropriate solution.