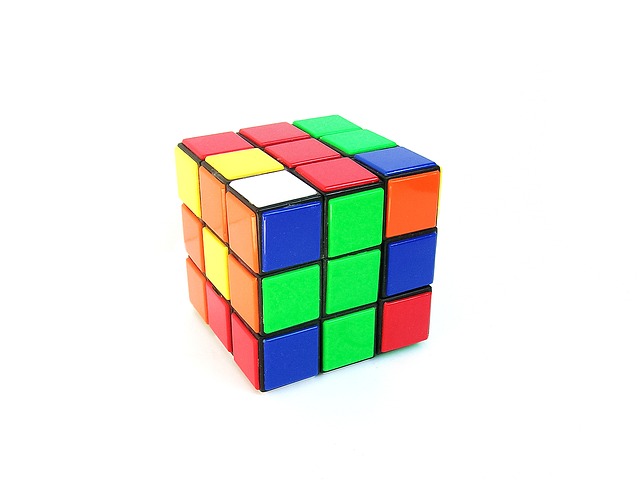

When most people attempt to solve a Rubik’s Cube, they pick a color and complete one face before moving on to the next. While this approach is fun and appears to work at first, it is ultimately doomed to failure, because while one side of the cube is being solved, the other five are being thrown into chaos.

Similarly, the components of a virtual data center are intertwined like the sides of a Rubik’s Cube. Seemingly isolated changes in one part of the IT infrastructure can have massive side effects elsewhere. For example, a network change might degrade SAN performance. Or the introduction of a new virtual machine might affect other workloads, both physical and virtual, that reside on the same shared storage array.

Shared components are fundamental to the success of a virtual data center. A virtual data center decouples operating systems and applications from physical hardware, which in turn allows workloads to move around dynamically, matching each workload to the resources available at the moment. This provides better ROI and arguably strengthens business continuity. But this same dynamism is what makes problems so difficult to solve when they arise.

Due to the sheer complexity of today’s data center, troubleshooting is typically done one layer at a time. That approach is a poor fit for virtual data centers, where new virtual machines and workloads with varying behavior, activity and intensity are introduced daily. Context is critical for VM troubleshooting, yet it is very hard to obtain, because the hypervisor abstracts away much of the useful information about the layers beneath it. In addition, the applications running on top of the hypervisor are highly dynamic, which makes traditional monitoring and troubleshooting methods inadequate. You need to take a step back and ask, “Are my current monitoring and management tools solving a single side of the cube, or are they providing enough perspective to solve the whole puzzle?”

The only way to solve all sides of your data center management problem is to use big data analytics, which has been transforming other industries for years. Walmart and Target, for example, have correlated many data points to accurately predict customer behavior. Similarly, some bridges are equipped with sensors whose data is analyzed to identify changes in heat signatures, vibration and structural stress, helping prevent mechanical and structural failures. With this in mind, IT pros should harness big data analytics to improve results in their own virtual data centers.

Applying big data analytics inside the hypervisor taps into the vast amount of data that passes through it, with insight into application, storage and other infrastructure elements. The result is a context-rich environment that provides an in-depth understanding of the layers above and below the hypervisor. For example, you can gain unprecedented insight into the workloads generated by virtual machines and how they affect lower-level infrastructure such as storage arrays. You can distinguish workloads from one another and understand how the infrastructure reacts to each of them. This, in turn, helps developers optimize their code to align better with the infrastructure, and lets infrastructure teams tune their systems as needed.

With big data analytics inside the hypervisor, everyone wins. You can view your data center in a holistic fashion, instead of solving individual problems one at a time. Don’t be puzzled by complex data center design challenges. Solve them with analytics.