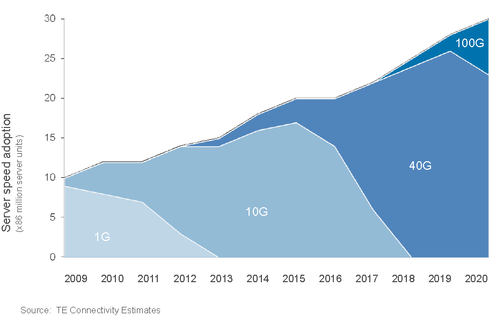

Data centers across the globe are running at 10 gigabit-per-second (Gbps) speeds and moving to 40 Gbps or 56 Gbps, using InfiniBand or Ethernet interconnects. Although 10 Gbps has been widely deployed for years and some companies still view it as "enough," the jump to 40 Gbps and 56 Gbps occurred much faster than the industry expected, signaling that 100 Gbps may be needed sooner rather than later.

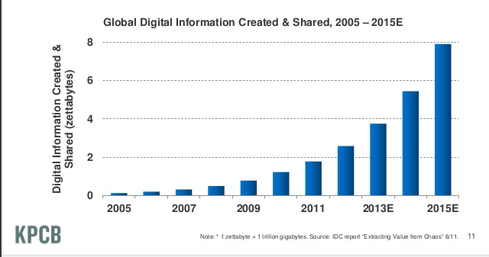

One indicator is the growth of data consumption, attributed to smartphones, social media, video streaming, and big data, all of which require big pipes to cope with the huge quantities of data that are being transferred. Twitter's massive slowdown when Ellen DeGeneres posted her selfie from the Academy Awards earlier this year was just one recent example of the need for more bandwidth. In fact, the amount of global digital content has exploded in the past five years.

Data centers are required to pass tremendous amounts of data back and forth between tens of thousands of nodes, without bottlenecks that can slow the process and ultimately cause gridlock. Video streaming and social media sites like Twitter, for example, need fast connectivity to avoid congestion and ensure that users avoid delays in streaming and updates.

Today's data centers also need less hardware, but with higher-performance technology that can efficiently handle multiple large data transfers at once, ultimately increasing bandwidth and reducing latency and operating costs.

100 Gbps adoption

In its forecast of the data center switch market, Crehan Research projected annual growth rates of more than 100% for sales of the data center switches that will make 40 Gbps and 100 Gbps the standard. Most of the growth in data center switch sales will come from systems offering 40 Gbps and above for links within the data center and network backbone. Sales of switches at 40 Gbps and above make up a little more than 10% of all data center switch sales this year, but could approach 70% by 2017.
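To put the share figures in perspective, the short Python sketch below works out the year-over-year multiplier implied by a move from roughly 10% to roughly 70%. It is only an illustration: the three-year horizon assumes "this year" means 2014, which the forecast as quoted here does not state, and the function name `implied_annual_multiplier` is hypothetical.

```python
def implied_annual_multiplier(start_share: float, end_share: float, years: int) -> float:
    """Return the constant yearly multiplier that takes start_share to end_share."""
    if start_share <= 0 or end_share <= 0 or years <= 0:
        raise ValueError("shares and years must be positive")
    return (end_share / start_share) ** (1.0 / years)


# Figures from the forecast quoted above; both are approximate.
START_SHARE = 0.10   # "a little more than 10%" this year
END_SHARE = 0.70     # "could approach 70%" by 2017
YEARS = 3            # ASSUMPTION: "this year" is 2014, so 2014 -> 2017

share_multiplier = implied_annual_multiplier(START_SHARE, END_SHARE, YEARS)
print(f"Implied yearly multiplier on share: {share_multiplier:.2f}x")
```

Under these assumptions, the share of 40 Gbps-and-above switches would have to nearly double each year (about 1.9x), which is in line with the projected annual sales growth of more than 100% for that segment.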

A recent survey of IT executives emphasized the growing demand for increased network bandwidth as one of the most critical issues facing data centers. Virtualization, cloud computing, big data, and the convergence of storage and data networks were the key factors. Moreover, the exponential growth in data and in the number of devices that generate and consume data, together with the rapid growth in applications that require fast access to data, is driving the need for 100 Gbps.

Consequently, we believe that the transition to 100 Gbps will occur much sooner than estimated by the analyst firms. As evidence, most of the IT executives surveyed were looking to deploy 100 Gbps now or in the near future to improve their data centers' I/O performance.

Data center architectures evolving

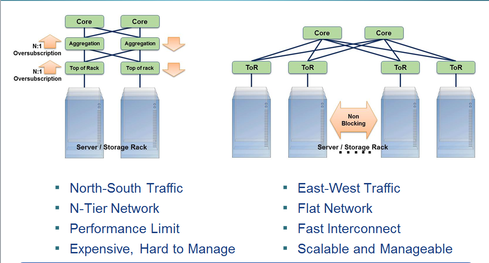

Data centers have become more modular, hosting up to thousands of VMs, and networks are shifting from the traditional three-tier model (top-of-rack/aggregation/core) to a flattened (leaf/TOR-spine/core) topology. These changes shift traffic from a north-south orientation to an east-west one; as a result, 75% of data center traffic is now east-west.

Traditional north-south traffic uses the first generation of 100 Gbps interconnect, which involves a few ports using high-power, high-cost SR10 (10 x 10 GbE) connectors with a large form factor. These core switches have become a bottleneck, serving too few ports with poor price/performance. The transition to an east-west traffic topology fixes this problem, enabling more data to run between multiple servers, scale easily, and use pipes that are both faster and cheaper per gigabit per second.

This next-generation 100 Gbps interconnect better fits modern data center needs. As part of this change, new low-power, small-form-factor 100 Gbps QSFP28 copper cables were introduced earlier this year and will reach the mass market (together with optical solutions) in 2015.

Cloud impact

Some market segments, such as high-performance computing or financial trading, require the fastest possible interconnect and therefore adopt new interconnect speeds immediately upon introduction. Traditional data centers, on the other hand, have been hesitant to move to new speeds.

For example, 10 Gbps Ethernet was first demonstrated in 2002, but it wasn't until 2009 that it was first adopted, and it only really started to ramp up in 2011. This is primarily due to the higher cost of equipment for the higher speed (even though it could be more cost-effective on a per-gigabit-per-second basis), the possible need to modify the data center infrastructure, and the higher costs of power and maintenance. These arguments are still valid for those traditional data centers.

Recently, though, there has been a shift toward cloud and web 2.0 infrastructures. Traditional data centers are being converted to large-scale private clouds, and web 2.0 applications and public clouds have become an integral part of our lives. These new compute and storage data centers require the ability to move data faster than ever before -- to store, retrieve, analyze, and store again, such that the data is always accessible in real-time.

As a result, adoption of 10 Gbps, 40 Gbps, and higher speeds has accelerated. Companies have already started to create 100 Gbps links by combining 10 lanes of 10 Gbps as an interim solution, in advance of the availability of the more traditional aggregation, which will offer four lanes of 25 Gbps.
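The lane arithmetic behind the two approaches is simple enough to sketch in a few lines of Python. This is only an illustration of the figures above: the `LaneConfig` class and its field names are hypothetical, and the sketch ignores encoding overhead and other physical-layer details.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LaneConfig:
    """A link built by aggregating several identical lanes (illustrative only)."""
    name: str
    lanes: int
    gbps_per_lane: int

    @property
    def aggregate_gbps(self) -> int:
        # Nominal link speed is lane count times per-lane speed.
        return self.lanes * self.gbps_per_lane


# The two ways of reaching 100 Gbps described above.
interim = LaneConfig("interim, 10 x 10 Gbps", lanes=10, gbps_per_lane=10)
next_gen = LaneConfig("four-lane, 4 x 25 Gbps", lanes=4, gbps_per_lane=25)

for cfg in (interim, next_gen):
    print(f"{cfg.name}: {cfg.lanes} lanes -> {cfg.aggregate_gbps} Gbps")

# Lanes saved per link by moving from the interim design to four lanes.
lanes_saved = interim.lanes - next_gen.lanes
print(f"Lanes saved per 100 Gbps link: {lanes_saved}")
```

Both configurations reach the same nominal 100 Gbps, but the four-lane design does so with six fewer lanes per link, which is part of why it suits smaller, lower-power connectors.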

As there are now more markets driving the need for speed, economies of scale will empower even the traditional markets to take advantage of these new technologies, enabling better utilization and efficiency in the data center.