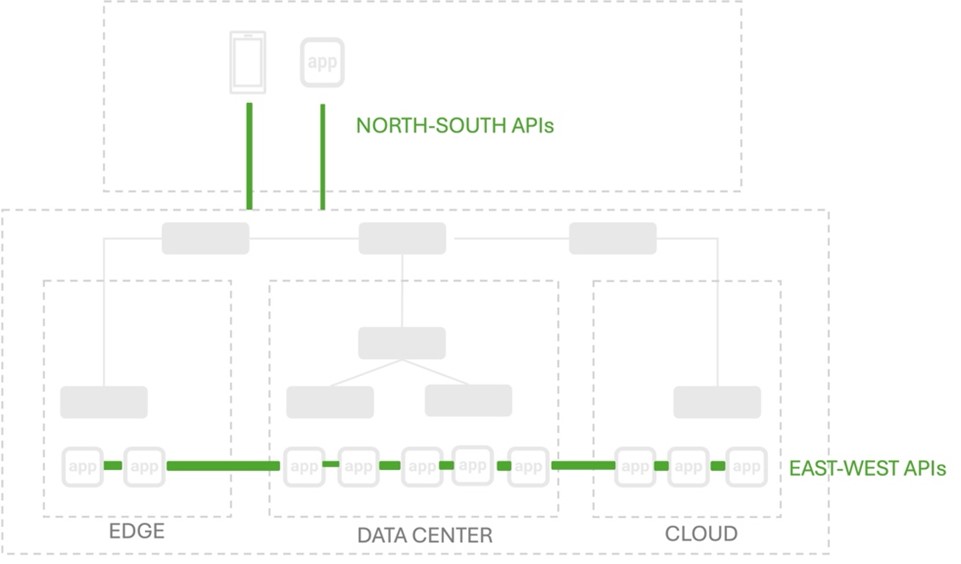

For the last decade or so, east-west traffic was just the traffic within a container cluster. That is, the communication that happens between microservices, usually in Kubernetes. The service mesh is an example of a solution that emerged to address the special need for security and visibility of east-west traffic.

But with organizations not only operating applications in a multicloud environment but deploying multiple components of an application in multiple environments (a.k.a. hybrid apps), east-west traffic is no longer contained by the cluster. It reaches across network boundaries, across the big bad Internet.

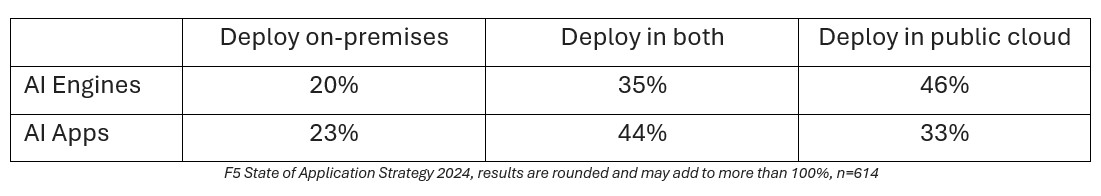

Now, just to make things even more exciting, our research tells us that the AI applications organizations are planning to deploy will be mostly hybrid. That is, while part of the application may be deployed in a public cloud (likely the LLM or ML model), other components (data sources, interfaces, agents, etc.) may reside on-premises. This is particularly true of components that augment generic LLMs with domain or business-specific data by employing a Retrieval-Augmented Generation (RAG) pattern.

That’s not conjecture. In our annual research, we asked respondents where they planned to deploy AI engines (LLMs, ML models) and AI apps (apps that use the AI engines), and that's what they told us.

So, if the apps and engines are deployed in different places, how do they communicate? Thanks for asking!

The answer is, of course, APIs. There is no question at this point that APIs are going to be one of the most important parts of AI applications, nor that their security will be paramount. And not just regular old APIs (REST and JSON) but APIs supporting data sources (vector databases) and knowledge graphs (GraphQL). All sorts of APIs will need to be delivered and secured on the new east-west data path that traverses core, cloud, and even edge.
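To make that east-west hop concrete, here is a minimal Python sketch of a hybrid RAG-style app: retrieval runs against a small in-memory stand-in for an on-premises vector store, and the result is packaged into the HTTPS request that would travel to a cloud-hosted LLM. Everything in it is an assumption for illustration only: the endpoint URL, the payload shape, the bearer token, and the helper names (`retrieve`, `build_request`) are hypothetical and do not describe any particular product or API. The request is built but never sent.

```python
import json
from dataclasses import dataclass

# Hypothetical endpoint for a cloud-hosted LLM; not a real service.
CLOUD_LLM_URL = "https://llm.example.com/v1/generate"


@dataclass
class Document:
    text: str
    embedding: list[float]


def dot(a, b):
    """Dot product, used here as a simple similarity score."""
    return sum(x * y for x, y in zip(a, b))


def retrieve(query_embedding, store, k=1):
    """On-premises step: rank local documents by similarity to the query."""
    ranked = sorted(
        store,
        key=lambda d: dot(query_embedding, d.embedding),
        reverse=True,
    )
    return ranked[:k]


def build_request(question, context_docs, api_token):
    """Describe the API call that would cross the network boundary to the cloud."""
    body = {"prompt": question, "context": [d.text for d in context_docs]}
    return {
        "method": "POST",
        "url": CLOUD_LLM_URL,
        "headers": {
            "Authorization": f"Bearer {api_token}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        "body": json.dumps(body),
    }


# Toy on-premises store with made-up two-dimensional embeddings.
store = [
    Document("Refund policy: 30 days.", [1.0, 0.0]),
    Document("Office hours: 9 to 5.", [0.0, 1.0]),
]

request = build_request(
    "What is the refund window?",
    retrieve([0.9, 0.1], store),
    "example-token",
)
```

The point of the sketch is where the boundary falls: the business-specific data stays local until `build_request` puts it on the wire, and that one API call is the east-west traffic that now has to be delivered and secured.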

Hybrid changes everything

Distributed applications today are not just about deploying different tiers (presentation, logic, data) in different locations but about deploying different components in the same tier in different locations. Microservices may have broken up the network, but hybrid is breaking up the data path by tossing application components to the four winds.

That means it’s not just about the regular old network now; it's about the application network. The top of the network stack, where apps and APIs communicate. The services that deliver and secure those apps and APIs must also be distributed.

If you haven’t heard about multicloud networking (MCN) yet, you might be one of the 4% of respondents in our annual research who operate only on-premises and therefore have no multicloud complexity to wrangle.

But for the rest of you, multicloud complexity is a real thing, and multicloud networking is one of the ways you can begin taming that beast. That's because MCN creates a secure, consistent network experience across core, cloud, and even edge. Put another way, MCN secures the new east-west highway between applications.

And because the ‘cars’ on that highway are largely APIs, that MCN solution will need to offer API Security, as well.

Ultimately, the reality of multicloud and its ensuing complexity is driving app delivery and security to evolve. And not just to include APIs. In much the same way we watched the ADC evolve from basic proxies, we are seeing the emergence of distributed app delivery and security from MCN. The result will be a platform capable of not only connecting core, cloud, and edge at the lower network layers but also securing the apps and APIs traversing the upper layers of that network.

Because the network doesn't stop at layer 3 (really, it doesn't), it goes all the way up the stack to layer 7.
