Over the last year or so, major outages at cloud, Internet, and content delivery network providers significantly disrupted operations at businesses ranging from local mom-and-pop stores to international companies.

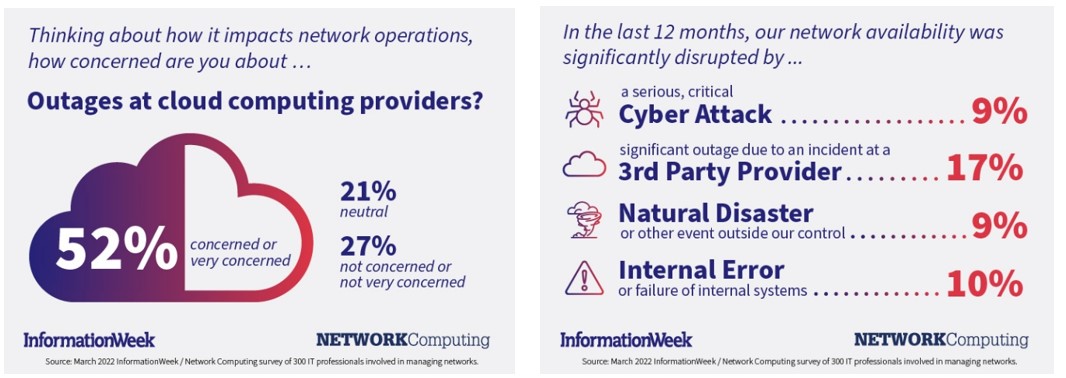

Anxiety about such outages was high among the respondents to this year’s InformationWeek/Network Computing survey of 300 IT professionals involved in managing networks. More than half of the respondents said they were concerned or very concerned about the impact of cloud outages on their network operations. Such concerns were justified: the leading cause of network availability problems in the last year was a significant outage due to an incident at a third-party provider.

In a small number of outages, enterprises had a way to minimize the impact. For example, during the June 2022 Microsoft Azure and M365 Online 12-hour outage, most east coast companies served through the impacted Virginia data center could not access services. However, those companies with premium always-available or zone-redundant services in that region were not impacted.

That gets to a point raised by some industry experts who believe you can have a cloud that is reliable, fast, or low cost, but you can only have two of the three. In the June incident, companies had the option to pay more (i.e., the cost of the premium services) in exchange for reliability.

A need to know what’s happening

Unfortunately, enterprises had no workaround for many of the outages over the last year that had the greatest impact. Most were caused by updates gone wrong or configuration changes by the providers. And many were global in nature. Things like zone-redundant premium services would not help.

So, what can network managers and enterprises do? Perhaps the most important things to do are to understand the operational vulnerabilities that could arise with a particular outage and keep pressure on the providers to improve their processes to avoid future outages.

With regard to the first point, the complexity of modern apps makes it hard to even know how an outage might impact an organization. Many applications today are built using numerous components and services, some of which are third-party offerings.

So, a bank might support many services, like the ability to check an account balance from a cellphone app via on-premises systems. But an online loan application might crash if the bank uses a third-party credit-worthiness database running on a cloud provider experiencing an outage.

Last year, such interdependencies were significant when Facebook experienced a six-hour outage that took down numerous applications and services that used Facebook credentials for logins.

These instances and others, where there are complex and often hidden dependencies, are driving demand for modern observability tools that help enterprises better understand the interplay of the various components that make up their apps and services. Such tools are also helpful in identifying root cause problems in today’s distributed and interlinked applications.
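To make the idea of hidden dependencies concrete, here is a minimal sketch of how a team might record which services depend on which components and then ask what breaks when one of them fails. The service names and the `DEPENDS_ON` map are hypothetical, loosely modeled on the bank example above; real observability tools discover and track these relationships in far more detail.

```python
from collections import deque

# Hypothetical dependency map: each service lists what it depends on directly.
DEPENDS_ON = {
    "balance_check": ["mobile_app", "on_prem_core"],
    "loan_application": ["mobile_app", "credit_db"],
    "credit_db": ["cloud_provider_x"],  # third-party database hosted in a cloud
    "mobile_app": [],
    "on_prem_core": [],
    "cloud_provider_x": [],
}


def impacted_by(failed, depends_on):
    """Return every service that depends, directly or indirectly, on `failed`."""
    # Invert the map so we can walk from a component to its dependents.
    dependents = {}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(service)

    # Breadth-first walk outward from the failed component.
    impacted = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for service in dependents.get(current, ()):
            if service not in impacted:
                impacted.add(service)
                queue.append(service)
    return impacted


print(sorted(impacted_by("cloud_provider_x", DEPENDS_ON)))
# prints ['credit_db', 'loan_application']
```

In this toy map, an outage at the cloud provider takes down the loan application through the credit database, while the balance check keeps working because it relies only on on-premises systems.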

Focus on the providers

That Facebook outage, like the Cloudflare and Google Cloud outages and many of the other major outages over the last year, was caused by a faulty configuration change.

After diagnosing the problem, Facebook stated: “Our engineering teams have learned that configuration changes on the backbone routers that coordinate network traffic between our data centers caused issues that interrupted this communication. This disruption to network traffic had a cascading effect on the way our data centers communicate, bringing our services to a halt.”

The misconfiguration prevented external computers and mobile devices from reaching Facebook's Domain Name System (DNS) servers and finding Facebook, Instagram, and WhatsApp.

Perhaps the biggest lesson enterprise IT managers should take away from that outage is for companies to “avoid putting all of their eggs into one basket,” says Chris Buijs, EMEA Field CTO at NS1. “In other words, they should not place everything, from DNS to all of their apps, on a single network.”

Specifically, companies should use a DNS solution that is independent of their cloud or data center. If a provider goes down, a company will still have a functioning DNS to direct users to other facilities.
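One rough way to check for this kind of single-provider dependency is to look at who operates a domain's authoritative nameservers. The sketch below is illustrative only: the hostnames are made up, and treating the last two DNS labels as "the provider" is a naive assumption that breaks for suffixes such as `co.uk`. It works on a list of nameserver names that would be gathered separately (for example, from an NS lookup).

```python
def provider_of(nameserver):
    """Naive heuristic (an assumption): the last two labels identify the provider."""
    labels = nameserver.rstrip(".").lower().split(".")
    return ".".join(labels[-2:])


def dns_providers(nameservers):
    """Return the set of distinct providers behind a list of nameserver names."""
    return {provider_of(ns) for ns in nameservers}


def has_independent_dns(nameservers, hosting_provider):
    """True if at least one nameserver is run by someone other than the host."""
    return any(p != hosting_provider for p in dns_providers(nameservers))


# Hypothetical example: both nameservers belong to the hosting provider.
same_basket = ["ns1.hostingco.example", "ns2.hostingco.example"]
# Hypothetical example: one nameserver is run by a separate DNS provider.
split_basket = ["ns1.hostingco.example", "a.dnsco.example"]

print(has_independent_dns(same_basket, "hostingco.example"))   # prints False
print(has_independent_dns(split_basket, "hostingco.example"))  # prints True
```

A result of `False` suggests that DNS and hosting share a single point of failure, which is the situation Buijs warns against.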

That thinking has brought more attention to the core DNS infrastructure. All internet and cloud services are in trouble if anything happens to the 13 DNS root servers worldwide. That point has been embraced by the Internet Corporation for Assigned Names and Numbers (ICANN), the organization that coordinates the DNS.

Unfortunately, there are both technical and political issues to consider when it comes to the DNS system. The Ukraine war raised the possibility of using the DNS system to isolate Russia, cutting its websites off from the Internet. That request was rejected. “In our role as the technical coordinator of unique identifiers for the Internet, we take actions to ensure that the workings of the Internet are not politicized, and we have no sanction-levying authority,” said Göran Marby, ICANN president and CEO. “Essentially, ICANN has been built to ensure that the Internet works, not for its coordination role to be used to stop it from working.”

On the technical front, the Internet Assigned Numbers Authority (IANA), which is a group within ICANN that is responsible for maintaining the registries of Internet unique identifiers powering the DNS system, conducts two third-party audits each year on different aspects of its operations to ensure the security and stability of the Internet's unique identifier systems. The reviews cover infrastructure, software, computer security controls, and network security controls.

Additionally, strengthening the security of the Domain Name System and the DNS Root Server System is one of the key tenets of ICANN’s five-year plan released in 2021.

Key takeaways

Enterprises are dependent on Internet and cloud services. Unlike the days of old, where a company could have a backup telecom provider, in most cases, there is only one provider today for any task at hand.

There are very few options to minimize the impact of a major outage. In some cases, there may be premium high-availability offerings that help if a provider’s regional center goes down. But many providers do not offer such services, and most often, the outages are due to configuration errors that quickly propagate globally, so those services do not help.

The best that enterprise network managers can do is use monitoring and observability tools and services to know when an outage has occurred and how it impacts their applications and services. The only other thing to do is pressure the providers to develop and carry out best practices to help avoid major service disruptions.
