Cloud computing, once the latest and greatest trend in the digital world, has become so prominent that it needs little explanation. Like the personal computer, datacenter-centric compute architecture is so ubiquitous that it is now a firmly established principle in the world of IT. But times have drastically changed.

We now generate incredible amounts of data at the edge: branch offices, manufacturing facilities, retail stores, restaurants, oil rigs, and vehicles. Sending all of this Internet of Things (IoT) and sensor data to the cloud for processing is becoming expensive, and it introduces performance bottlenecks and security challenges. In an ironic twist of fate, edge computing takes us back to placing compute close to where data is created, as we did in the era before the cloud. In fact, edge computing is proliferating so quickly that Gartner predicts that “by 2025, more than 50% of enterprise-managed data will be created and processed outside the data center or cloud.” Today, edge computing is growing from a single compute node at a remote location into an ecosystem of resources, applications, and use cases, including 5G and IoT. There are even different types of edge computing! The National Institute of Standards and Technology (NIST) also defines fog and mist computing. The distinctions are foggy indeed. Pun intended.

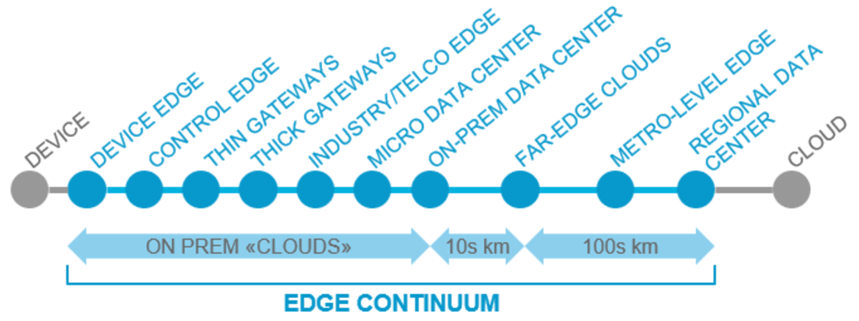

Ultimately, edge computing is a continuum that runs from end-user devices to IoT appliances, routers/gateways, and service provider infrastructure such as 5G cell towers with co-located micro data centers. The Software AG Technology Radar for 5G Edge captures this succinctly in the graphic below.

Immediate benefits that edge computing provides to distributed enterprises include:

- Reduced Latency and Increased Performance – Milliseconds make a difference. Placing compute close to where data is generated avoids round trips to a distant data center, which boosts performance for latency-sensitive applications.

- Security – Data is processed within the confines of an internal network, relying on existing infrastructure security controls.

- Cost Savings – Doing the bulk of processing at the edge means that only results or summaries need to travel upstream, which saves bandwidth and cost.

- Remote Reliability – Because the edge system processes data locally, it keeps working through intermittent connectivity, which is especially critical in bandwidth-constrained environments such as an oil rig or a forward-deployed military unit.

- Rapid Scalability – Edge locations can quickly deploy additional compute nodes and IoT devices to scale their operations without having to rely on a central data center.
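To make the cost-savings point concrete, here is a minimal sketch of edge-side aggregation, assuming a node that collects raw sensor readings and forwards only one small summary per fixed-size window. The function names, the summary fields, and the synthetic readings are all hypothetical and chosen for illustration; real deployments would pick windows and statistics to suit the application.

```python
import json
from statistics import mean


def summarize_window(readings):
    """Reduce one window of raw readings to a single summary record (hypothetical schema)."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 3),
    }


def edge_aggregate(readings, window_size):
    """Split raw readings into fixed-size windows and summarize each one locally."""
    summaries = []
    for start in range(0, len(readings), window_size):
        window = readings[start:start + window_size]
        summaries.append(summarize_window(window))
    return summaries


def payload_bytes(records):
    """Size in bytes of the JSON payload that would be sent upstream."""
    return len(json.dumps(records).encode("utf-8"))


if __name__ == "__main__":
    # Synthetic temperature readings standing in for a sensor feed (illustrative only).
    raw = [20.0 + (i % 10) * 0.1 for i in range(600)]
    summaries = edge_aggregate(raw, window_size=60)
    print(len(raw), "raw readings ->", len(summaries), "summaries")
    print("raw payload bytes:", payload_bytes(raw))
    print("summary payload bytes:", payload_bytes(summaries))
```

The trade-off is fidelity: the upstream system sees min/max/mean per window instead of every sample, so anything that needs the raw signal has to run at the edge as well.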

What about “fog computing” and “mist computing”? Fog computing is a subset of edge computing that usually refers to computing that takes place in the immediate vicinity of the user, where data is created. Contrast this with a mini data center deployed outside a factory, which is “edge computing” but too far away from the user to be considered “fog computing.” Mist computing has more to do with the intelligence of the infrastructure devices themselves. NIST SP 500-325 “Fog Computing Conceptual Model” defines mist computing as a “lightweight form of fog computing that resides directly within the network fabric at the edge, bringing the fog computing layer closer to smart end-devices.” This is especially applicable to modern networking, where we see a shift from manual box-by-box configuration to a controller-based, declarative model. The network controller defines the desired end state, and the underlying devices have the intelligence to get there; the controller does not tell the devices “how” to accomplish the task.
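The declarative model described above can be sketched as a simple reconciliation loop. This is a minimal illustration, assuming the desired state is just a set of VLAN IDs; the `Device` class, `reconcile`, and `push_intent` are hypothetical names and do not correspond to any vendor's controller API. The controller only broadcasts the end state, and each device computes its own add/remove actions from its current state.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    """A hypothetical network device that can converge on a desired state by itself."""
    name: str
    vlans: set = field(default_factory=set)

    def reconcile(self, desired_vlans):
        """Work out and apply the changes needed to reach the desired state.

        The controller supplies only *what* the end state is; the device decides *how*.
        Returns the actions taken so the controller can audit them.
        """
        actions = []
        for vlan in sorted(desired_vlans - self.vlans):
            actions.append(f"add vlan {vlan}")
        for vlan in sorted(self.vlans - desired_vlans):
            actions.append(f"remove vlan {vlan}")
        self.vlans = set(desired_vlans)  # converge to the intended state
        return actions


def push_intent(devices, desired_vlans):
    """Controller side: send the same desired state to every device, no per-device steps."""
    return {d.name: d.reconcile(desired_vlans) for d in devices}


if __name__ == "__main__":
    switches = [Device("edge-sw1", {10, 40}), Device("edge-sw2", {10, 20, 30})]
    print(push_intent(switches, {10, 20, 30}))
    # A second push with the same intent should produce no actions (idempotent).
    print(push_intent(switches, {10, 20, 30}))
```

Because each device diffs against its own current state, the same intent can be pushed repeatedly and devices that already match simply report no changes.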

What about the drawbacks of edge, fog, and mist computing? Ironically, security is also a drawback. With many "smart" IoT devices on the network, the attack surface grows. IoT devices are notorious for rarely being patched, and manufacturers sometimes abandon them altogether. Another problem is that IoT devices can establish out-of-band management connections to destinations outside your security perimeter. This is especially problematic in Industrial Control Systems (ICS), where the Purdue Model prohibits network traffic from traversing the industrial DMZ without a jump server.

Another issue is that edge computing requires more hardware at the edge. For example, an IoT camera would ordinarily send its video feed to the public cloud for analysis. In an isolated edge use case, however, we would need to deploy a beefy compute system locally to run motion-detection algorithms. This compute density problem grows with every device and sensor we add to the mix, and it is further exacerbated by space constraints and exposure to extreme temperatures, dust, humidity, and vibration.

What we see in the market right now is a need for dense, modular, small form factor systems that address the needs of edge, fog, and mist computing. These systems also need to be secure and rugged, with the compute power and configuration flexibility to run a variety of workloads, including AI/ML. Dell has answered the call by expanding its popular XR line of ruggedized servers with the Dell PowerEdge XR4000 family of rugged multi-node edge servers. The new product was unveiled on October 12 as part of Project Frontier.

With its unique form factor and multiple deployment options, the XR4000 server provides the flexibility to start with a single node and scale up to four independent nodes as needed. Deployment options can change with the requirements of each workload; for example, a user can add a 2U GPU-capable sled. The same sleds work in either the flexible or the rackmount chassis, depending on space constraints or user requirements. PowerEdge XR4000 offers a streamlined approach to edge deployments across different edge use cases. Addressing the need for a small form factor at the edge with industry-standard rugged certifications (NEBS and MIL-STD), the XR4000 ultimately provides a compact solution with improved edge performance, low power consumption, reduced redundancy, and improved TCO.